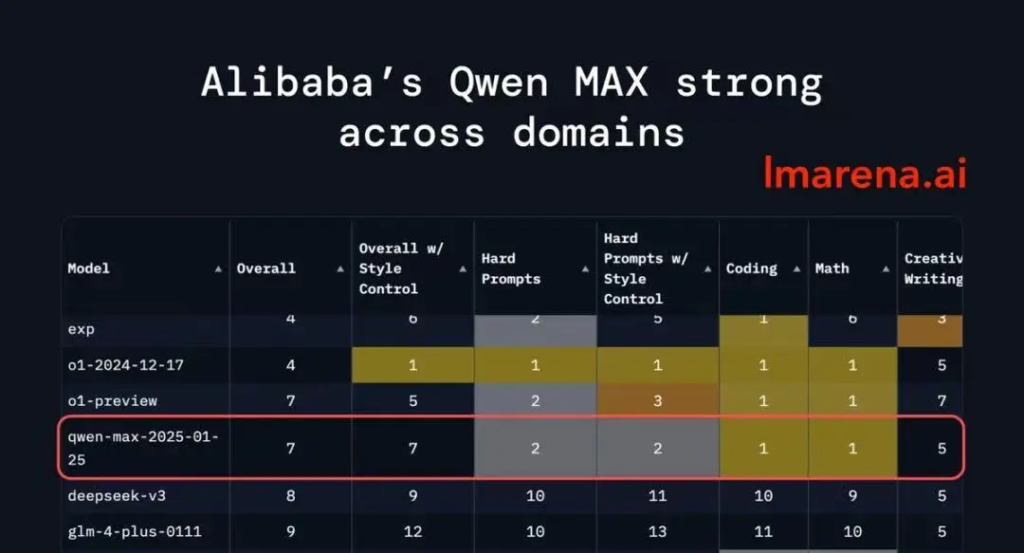

Early yesterday morning (February 4), the Chatbot Arena LLM Leaderboard published its latest update. The recently released Qwen2.5-Max entered the top ten directly, surpassing models such as DeepSeek V3, o1-mini, and Claude-3.5-Sonnet to rank seventh in the world with a score of 1332. Qwen2.5-Max also ranked first in math and programming and second in hard prompts.

https://lmarena.ai/?leaderboard

Qwen2.5-Max is the Alibaba Cloud Tongyi team’s latest exploration of MoE (Mixture-of-Experts) models, and the new model shows strong all-round performance. On mainstream benchmarks such as Arena-Hard, LiveBench, LiveCodeBench, GPQA-Diamond, and MMLU-Pro, Qwen2.5-Max is on par with Claude-3.5-Sonnet and surpasses GPT-4o, DeepSeek-V3, and Llama-3.1-405B on almost all of them.

Chatbot Arena’s official account, lmarena.ai, commented that Alibaba’s Qwen2.5-Max performed strongly across many areas, especially technical ones (programming, mathematics, hard prompts, and so on).

Chatbot Arena is a large-model evaluation platform launched by LMSYS Org that currently covers more than 190 models. The leaderboard pairs models anonymously and presents each pair to users for blind testing; users then vote on which model performed better based on their real conversation experience. As a result, the Chatbot Arena LLM Leaderboard has become the most important arena for the world’s top large models.
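To make the pairwise-voting idea concrete, here is a minimal sketch of how blind head-to-head votes can be turned into a ranking using a simple Elo-style update. This is an illustration only, not the leaderboard’s actual scoring code: the model names, the vote records, the starting rating of 1000, and the `k` factor are all assumptions made for the example.

```python
from collections import defaultdict


def expected_score(r_a, r_b):
    """Probability that A beats B under the standard Elo logistic curve."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update_ratings(votes, k=32.0, initial=1000.0):
    """Apply Elo updates for a sequence of (model_a, model_b, winner) votes.

    winner is "a", "b", or "tie". k and initial are illustrative values.
    """
    ratings = defaultdict(lambda: initial)
    outcome = {"a": 1.0, "b": 0.0, "tie": 0.5}
    for model_a, model_b, winner in votes:
        r_a, r_b = ratings[model_a], ratings[model_b]
        e_a = expected_score(r_a, r_b)
        s_a = outcome[winner]
        # The two updates are symmetric, so total rating is conserved.
        ratings[model_a] = r_a + k * (s_a - e_a)
        ratings[model_b] = r_b + k * ((1.0 - s_a) - (1.0 - e_a))
    return dict(ratings)


# Hypothetical blind-test votes between three placeholder models.
votes = [
    ("model-x", "model-y", "a"),
    ("model-y", "model-z", "tie"),
    ("model-x", "model-z", "a"),
]
ratings = update_ratings(votes)
leaderboard = sorted(ratings, key=ratings.get, reverse=True)
```

Because users do not know which model produced which answer, each vote reflects only the quality of the responses, and a model’s score rises more when it beats a higher-rated opponent.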

Previously, Qwen2.5-72B-Instruct also broke into the global top 10 on the Chatbot Arena leaderboard after its release, making it a high-scoring Chinese large model; Qwen2-VL-72B-Instruct reached ninth place on the Vision leaderboard, a strong result for an open-source model.

Currently, enterprises can call the Qwen2.5-Max API through Alibaba Cloud Bailian, and developers can try Qwen2.5-Max for free on the Qwen Chat platform.

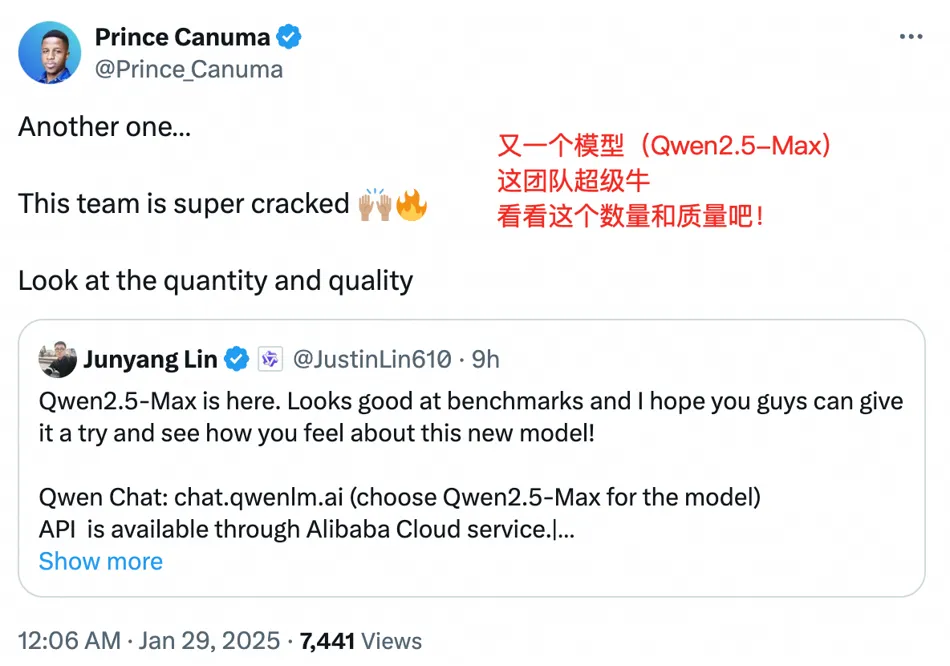

The release of Qwen2.5-Max attracted a lot of attention among overseas developers. After comparing DeepSeek-V3 and Qwen2.5, some netizens highly praised the performance of Qwen2.5-Max.

Some netizens joked that OpenAI CEO Sam Altman should be worried: another Chinese model is coming.

Many overseas netizens said that the iteration speed and quality of China’s new models are amazing.

Alibaba Cloud was one of the earliest technology companies in China to open-source its self-developed large models. Its Tongyi Qianwen (Qwen) family has been open-sourced across all sizes and modalities, with releases that include large language models and multimodal large models.

Globally, Qwen has more than 90,000 derivative models, surpassing Llama to become the world’s largest open-source model family. The release of Qwen2.5-Max has also been well received by developers around the world, who have responded in many languages.

“With Qwen2.5-Max, can we say goodbye to ChatGPT?!” some Arabic-speaking netizens said.

A number of overseas netizens, writing in English, expressed their amazement at the performance of Qwen2.5-Max.

The Tongyi team said that continuously scaling up data and model parameters can effectively improve a model’s intelligence. In addition to continuing to explore pre-training scaling, the team will also invest heavily in scaling reinforcement learning, hoping to achieve intelligence beyond that of humans and to drive AI to explore the unknown.

Entering China